FPGAs and MPSoCs are ideally suited for machine vision applications due to their ability to process large amounts of data in parallel and at high speeds. FPGAs can run highly power-efficient neural network implementations and benefit from ultra-low-latency connections to multiple image sensors. Given the inherent strengths of FPGAs for machine vision, it surprises me that GPUs have become the dominant hardware platform for deep learning applications in recent years. In my opinion, most of the potential for FPGAs in machine vision remains to be exploited.

Over the last few weeks, we’ve been working on a new range of FMC products that I hope will enable more people to exploit the potential of FPGAs in machine vision applications. We’re calling this project, and the products within it, Camera FMC. Our goal is to create the hardware and software that will allow people to connect cameras to FPGAs, with:

- Support for multiple FPGA and MPSoC development boards

- Multiple camera options from existing and open ecosystems

- Great documentation and support

- Reasonable cost

There’s a lot of work to be done, but we’re making good progress and I’m excited to share some information about two of these products today.

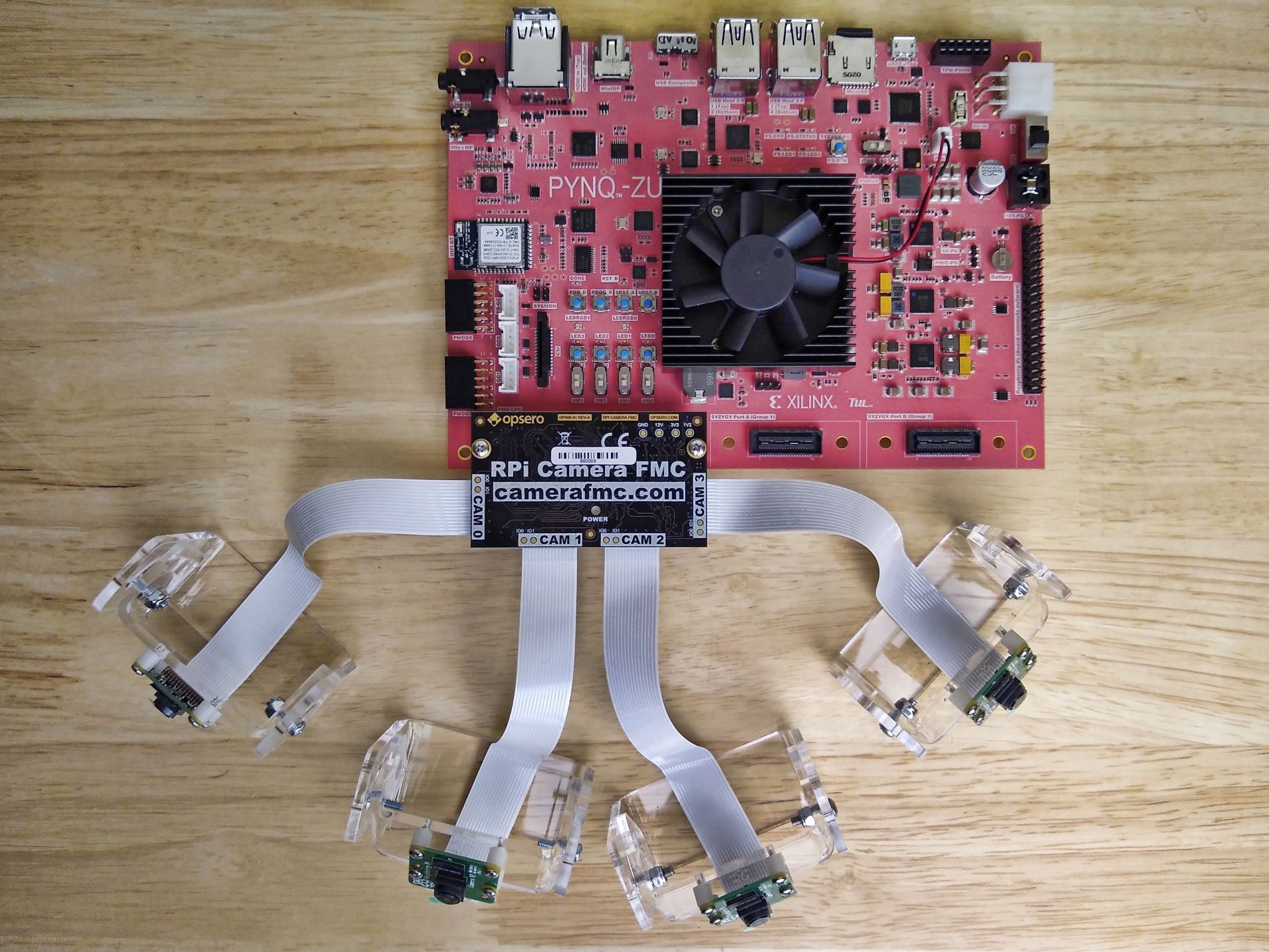

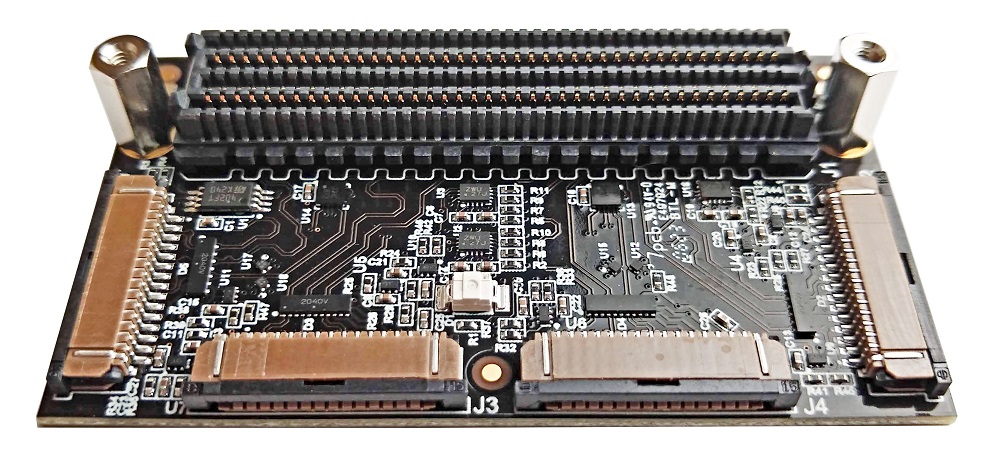

RPi Camera FMC

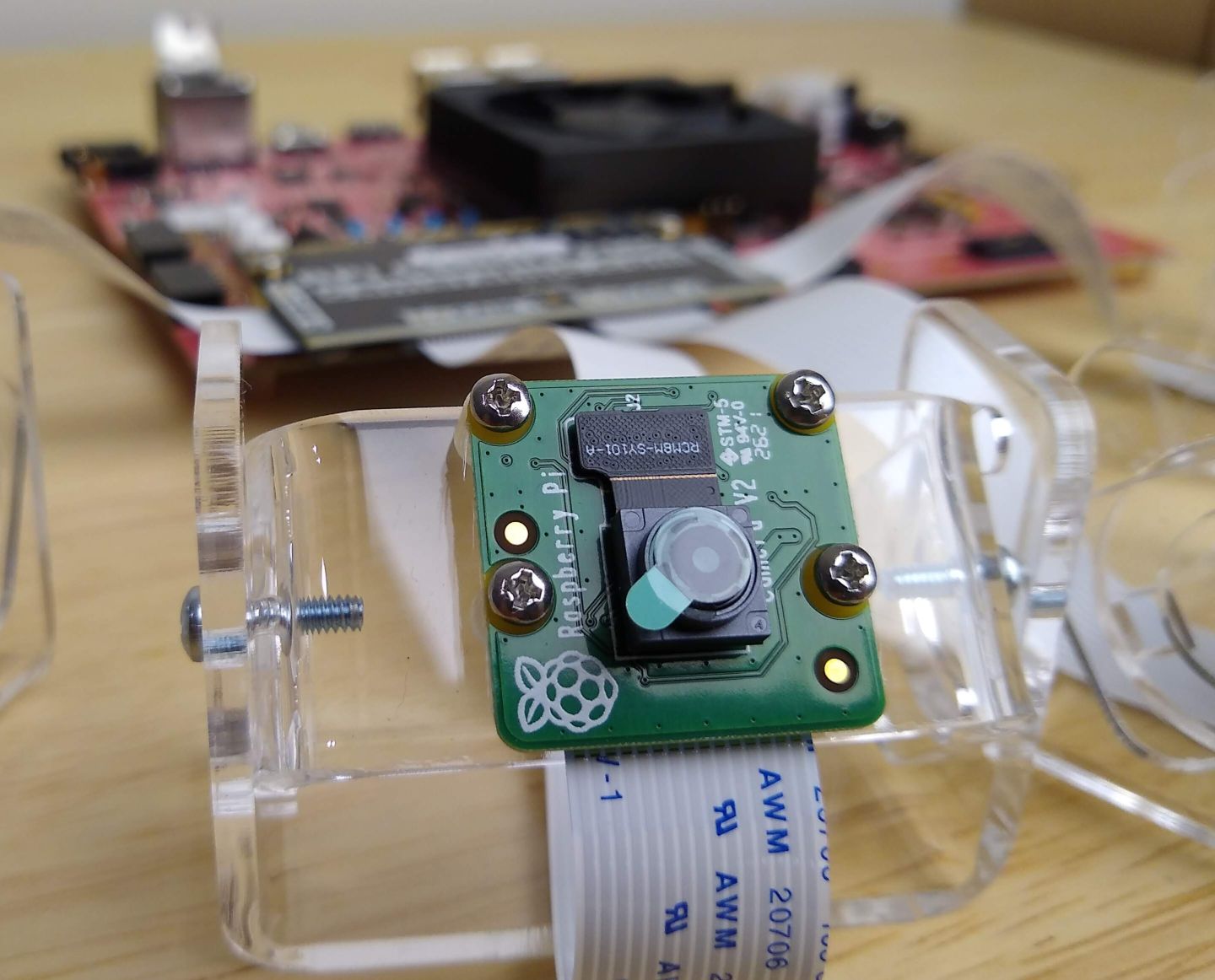

The RPi Camera FMC supports six different Zynq UltraScale+ development boards (see below for the list) and allows connection to 4x Raspberry Pi cameras (2-lane MIPI). The example designs for this product are hosted on GitHub (RPi Camera FMC Example designs), so keep an eye on the repo for our latest developments. At the moment, the designs simply take the video streams from all 4 cameras and feed them to a DisplayPort monitor. Below are some photos of the first boards.

Depth Camera FMC

Now this is the product that I’m really excited about. The Depth Camera FMC looks pretty much like the RPi Camera FMC (above), but it connects to 4x Luxonis OAK-FFC cameras instead. The 26-pin Luxonis camera interface supports cameras with up to 4 MIPI lanes, which means that it can be used with cameras of much higher resolution and/or frame rate than the RPi camera interface can support. Currently we have (early stage) example designs for six different Zynq UltraScale+ boards (the ones listed below), and you can check out the GitHub repo for more info on the specific camera support for each of the dev boards.
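To put some rough numbers on the 2-lane versus 4-lane difference, here is a back-of-the-envelope sketch in Python. It only estimates whether a raw video stream fits within the aggregate bandwidth of a MIPI link. The function names, the 1.5 Gbit/s per-lane rate and the 20% allowance for blanking and protocol overhead are all assumptions made for illustration; the real per-lane rate depends on the sensor, the D-PHY version and the FPGA receiver, so check the datasheets before relying on any of these figures.

```python
# Rough MIPI link budget estimate. All default values are illustrative
# assumptions, not figures taken from any camera or FPGA datasheet.

ASSUMED_GBPS_PER_LANE = 1.5   # assumption: per-lane line rate in Gbit/s
ASSUMED_OVERHEAD = 1.2        # assumption: 20% for blanking and protocol overhead


def required_gbps(width, height, fps, bits_per_pixel, overhead=ASSUMED_OVERHEAD):
    """Approximate bandwidth needed by a raw video stream, in Gbit/s."""
    return width * height * fps * bits_per_pixel * overhead / 1e9


def link_gbps(lanes, gbps_per_lane=ASSUMED_GBPS_PER_LANE):
    """Aggregate bandwidth of a link with the given number of data lanes."""
    return lanes * gbps_per_lane


def fits(width, height, fps, bits_per_pixel, lanes,
         gbps_per_lane=ASSUMED_GBPS_PER_LANE):
    """Return True if the stream fits within the link's aggregate bandwidth."""
    needed = required_gbps(width, height, fps, bits_per_pixel)
    return needed <= link_gbps(lanes, gbps_per_lane)


if __name__ == "__main__":
    # Hypothetical modes: 1080p30 and a 12 MP sensor at 30 fps, both RAW10.
    for name, (w, h, fps) in {"1080p30": (1920, 1080, 30),
                              "12MP30": (4056, 3040, 30)}.items():
        for lanes in (2, 4):
            ok = fits(w, h, fps, 10, lanes)
            print(f"{name} RAW10 on {lanes} lanes: "
                  f"needs {required_gbps(w, h, fps, 10):.2f} Gbit/s, "
                  f"link {link_gbps(lanes):.1f} Gbit/s, fits={ok}")
```

Under these assumptions, a 1080p30 RAW10 stream fits comfortably on 2 lanes, while a 12 MP RAW10 stream at 30 fps exceeds a 2-lane link and needs all 4 lanes.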

If you haven’t heard of Luxonis, you should really check them out. These guys have developed some really impressive USB and PoE cameras, powered by Intel Myriad VPUs, for real-time object detection and other AI applications on edge devices.

But let’s take a step back. As impressive as the USB and PoE cameras are, our Depth Camera FMC doesn’t connect to them. For use with FPGAs, we instead want to connect to the Luxonis OAK-FFC cameras - these are a range of bare board camera modules with flex cables attached to them. Luxonis has designed a range of these flex cable camera modules to be used with Luxonis baseboards, and what’s more they’ve open sourced them all. If I’ve still got your attention, then you probably have some questions:

Why not connect to the Luxonis USB and PoE cameras? You already can. If you want to run neural networks external to the FPGA, then you can plug a Luxonis USB or PoE camera directly into most FPGA/MPSoC dev boards (given most have a USB and/or Ethernet port). Once you get Linux running on the board, then you can simply install the DepthAI API from the command line and away you go. With the Depth Camera FMC, the developer’s objective is to do at least part of the video processing on the FPGA, and also to get the images into the FPGA fabric with the absolute lowest latency.

Why not connect to the Raspberry Pi 4-lane MIPI cameras via their 22-pin interface? The market offering for RPi 4-lane cameras is a bit limited because most RPi models don’t have the compute power to deal with the throughput of a 4-lane MIPI camera anyway. This situation will no doubt improve with time, but there were a couple of other issues with the 22-pin interface that I mention below.

Why use Luxonis OAK-FFC cameras? For a few reasons. They already have a good range of cameras on offer, and they are steadily growing the portfolio. They’ve designed a good physical interface for the cameras, which addresses a few issues with the RPi 22-pin interface - such as support for higher (power supply) currents and support for synchronization between multiple cameras. Finally, we chose Luxonis OAK-FFC cameras because Luxonis has open sourced the hardware designs of all of their camera modules, which is a tremendous advantage for developers.

Will Depth Camera FMC work with the DepthAI API? No. The Depth Camera FMC will only allow for direct connection of Luxonis OAK-FFC cameras to the FPGA fabric. It will not be loaded with the Intel Myriad VPU, so we can’t leverage the DepthAI API with this product alone. However, at a later time I’ll share some ideas that might allow us to combine the power of FPGAs with that of the Luxonis SoMs.

Supported boards

Below is the list of the Zynq UltraScale+ development boards that these two FMCs will support.

- AMD Xilinx ZCU104

- AMD Xilinx ZCU102

- AMD Xilinx ZCU106

- TUL PYNQ-ZU

- Digilent Genesys-ZU

- Avnet UltraZed-EV Starter Kit

The image below shows the RPi Camera FMC connected to the TUL PYNQ-ZU with four Raspberry Pi cameras connected.

If you’re interested in these products or you want more information, just get in touch.